Data science projects exist to reproducibly generate knowledge from data.

That is, the unifying purpose of all the tools at our disposal from exploratory data analysis and visualizations, through statistical modeling and all the way to bleeding edge deep learning architectures with mammoth parameter spaces, is to create new knowledge. We are, broadly speaking, either learning directly via inference or demonstrating by construction that our black box can solve a given problem. Excellence demands that we be transparent with our process, so much so that another person could reproduce it, scrutinize it, tinker with it and improve it.

GNU Make, the classic and long-standing build utility, makes these goals fabulously easier to achieve.

In the example project created for this post, we’ll download NeurIPS abstracts, transform them a bit, and finally fit a latent Dirichlet allocation model. Throughout, we’ll use Make to orchestrate this process. Among the many benefits we’ll cover:

- A natural way to express the dependencies among steps in a training pipeline. (Read, “an escape from ‘shoot are these notebook code cells in the right order…’ hell.”)

- A nudge toward modularity: Easy dependency management encourages us to break our procedural monoliths into tiny, beautiful temples.

- Easily leverage utilities: Since Make orchestrates commands for us, we can leverage anything installed on the host. Want to process text with sed before downstream tasks in python? Lemon squeezy, Fam.

- Faster iterations: Make helps us avoid needlessly repeated computation, speeding our innovation cycles and helping us build faster.

- Easier external collaboration: By relying on a collection of scripts rather than notebooks, and expressing their dependencies, Make helps us turn over a spec to our friends in data engineering without much added work.

The example project follows the DS cookie cutter released by DrivenData. This template first keyed me into the value of Make for DS, and it’s an efficiency level up in its own right.

What is Make

(Experienced users of Make, freely skip this section—you’ll be bored.)

Traditionally and by design, Make is a build tool for executables. From GNU.org:

GNU Make is a tool which controls the generation of executables and other non-source files of a program from the program’s source files. Make gets its knowledge of how to build your program from a file called the makefile, which lists each of the non-source files and how to compute it from other files. When you write a program, you should write a makefile for it, so that it is possible to use Make to build and install the program.

It’s typical to see Make files in C++ projects, for example. But this is its most common use, not a limitation. More from GNU:

Make figures out automatically which files it needs to update, based on which source files have changed. It also automatically determines the proper order for updating files, in case one non-source file depends on another non-source file. As a result, if you change a few source files and then run Make, it does not need to recompile all of your program. It updates only those non-source files that depend directly or indirectly on the source files that you changed. […] Make is not limited to building a package. You can also use Make to control installing or deinstalling a package, generate tags tables for it, or anything else you want to do often enough to make it worth while writing down how to do it.

Summarized, Make manages the process of checking which files need to be created or re-created: If nothing has changed since the last time and output was produced, we can skip the step of re-creating it. And it can be used to manage any series of commands we’d like to make reproducible.

This very naturally lends itself to analysis or machine learning projects. Consider this pretty standard data science workflow:

- Get some data, via file download or querying a database.

- Process the data for cleaning, imputation, etc.

- Engineer features.

- Train and compare a series of model architectures or configurations.

Over the course of this very common DS project cycle, we make dozens of decisions about how to process what, which hyper parameters to try, different methods of imputation, and more.

Ease of expression

Each of these steps can be naturally expressed in a Make rule. For example, say we need to download a file like the NeurIPS dataset from the UCI ML Repository . We might download it with e.g. curl. This makes for a straight-forward Make rule:

data/raw/NIPS_1987-2015.csv:

curl -o $@ https://archive.ics.uci.edu/ml/machine-learning-databases/00371/NIPS_1987-2015.csv

In the language of Make, this target file we want to create has no prerequisites, just a recipe to create it. That $@ is a Make automatic variable that fills in the name of the target. Navigate to the root of the demo project folder, run make --recon data/raw/NIPS_1987-2015.csv and you should see the commands Make will run to produce the file:

curl -o data/raw/NIPS_1987-2015.csv https://archive.ics.uci.edu/ml/machine-learning-databases/00371/NIPS_1987-2015.csv

Run make data/raw/NIPS_1987-2015.csv without the --recon flag and that command will actually run. Run it again and you’ll get this message, Make’s way of telling us not to waste our time downloading it again:

make: `data/raw/NIPS_1987-2015.csv' is up to date.

We see that the raw file is column-wise, and we want to transpose it. We’ll put the transposed version in data/interim, jot up a lightweight script to perform the op, and express the dependency and execution in another rule:

data/processed/NIPS_1987-2015.csv: src/data/transpose.py data/raw/NIPS_1987-2015.csv

$(PYTHON_INTERPRETER) $^ $@

Here we used $^, another automatic variable that fills in all of the prerequisites. Another call to Make using the --recon flag, make data/processed/NIPS_1987-2015.csv --recon, will show us the commands we’ll see on a real run, namely that it’ll create the target file using a python script we’ve written:

python3 src/data/transpose.py data/raw/NIPS_1987-2015.csv data/processed/NIPS_1987-2015.csv

And when it comes time to train a model, this step naturally depends on the processed data:

models/%_topics.png: src/models/fit_lda.py data/processed/NIPS_1987-2015.csv src/models/prodlda.py

$(PYTHON_INTERPRETER) $< $(word 2, $^) $@ --topics $*

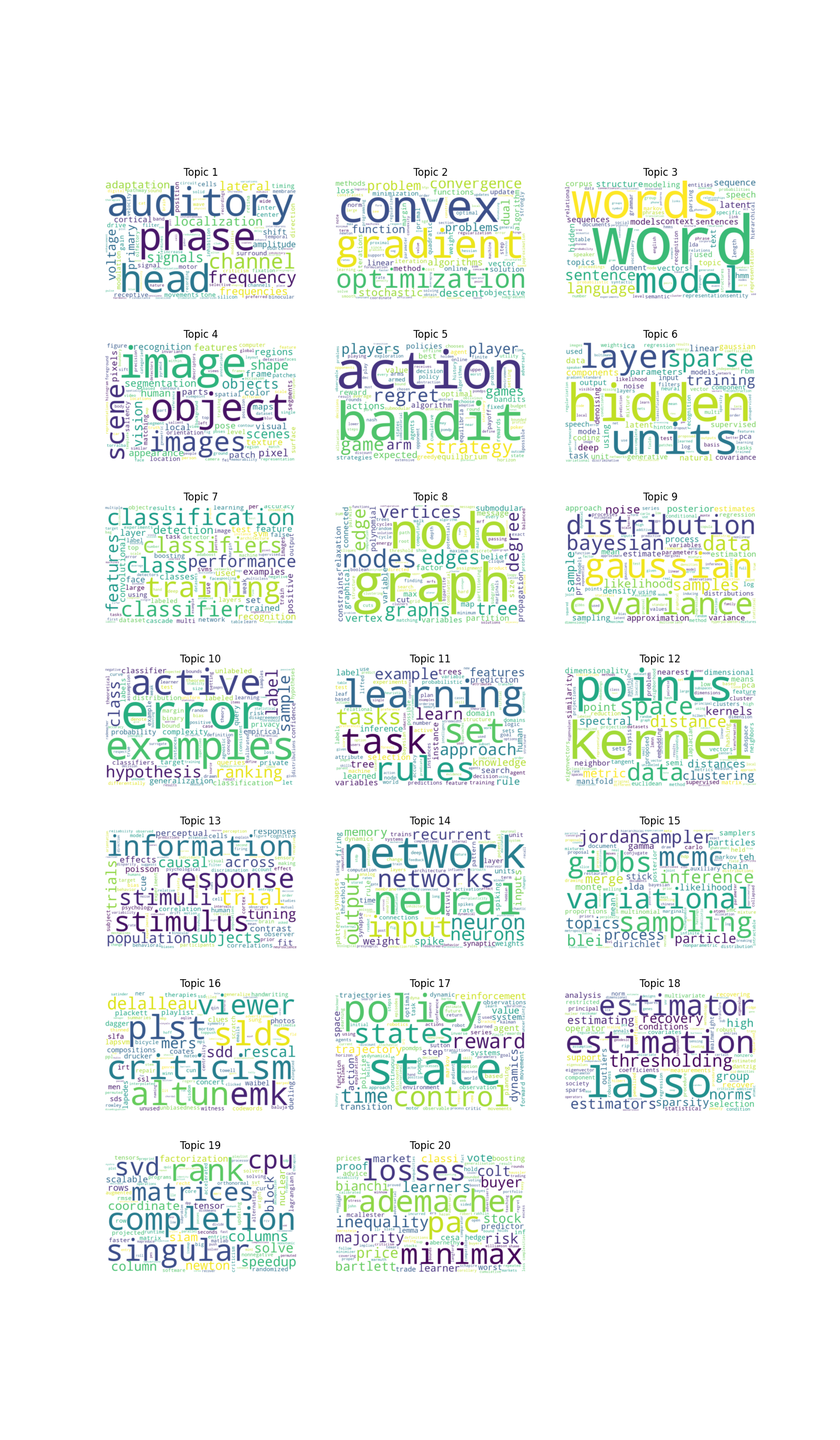

Here, we’ve written fit_lda.py to train a model and create a word cloud for each topic, which we capture in the png target file. That’s simplified for the sake of example. In a typical, professional build, our targets would be much more comprehensive: We’d include learning curves, summaries of error on each segment of our dataset, and anything else we need to empirically compare different model architectures. We’re also leveraging pattern rules, using the character % in the target, along with Make’s word macro.

We’ll cover more on these in the section on modularity.

Each of these steps has natural dependencies on prior steps, and Make gives us a natural way of expressing them.

Faster iteration

Make handles the dependency relationships for you. Say you’ve written the scripts and steps to process your data, and saved the results to a file. You’re now at the stage of training a model. As you tinker with your training script, make Recognizes that the timestamp of the training data file has not changed, and skips the step of reproducing it.

Now, imagine that during this model development, you learn of an edge case indeed a pre-processing we didn’t account for. Very common occurrence.

Naturally, we update the script that performs our pre-processing operation. Make recognizes this script as a prerequisite of the file containing the processed data, and when you next run the command to train your model, Make will recognize that the data needs to be re-created. When all prerequisites are older than the target, Make skips the rebuild steps.

This kind of thing happens dozens of times over the course of a data science project. Making it faster can greatly increase your iteration speed. Edmund Lau writes about iteration speed in The Effective Engineer, applied in a software engineering setting, but the key takeaway carries over to data science: “The faster you can iterate, the more you can learn.” For my part, avoiding this kind of needless repetition has made projects much more efficient.

Encouraged modularity

Of course, you could use Make to execute a single script that did all of this in one go. It does nothing to enforce modularity as we’ve implemented it in this example.

But each of these steps takes time, and the more you have to repeat them the slower your iteration speed will be. Who wants to spend the extra time waiting to re-process their data? There are plenty of ways to avoid these extra reprocessing steps, but Make makes it easy.

And there’s great power in being able to flexibly create rules. For example, say you’re comparing several model configurations. In a neural network, you might vary the number of hidden units in a few layers, very drop out parameters, etc. Make offers an easy way to save each of these and compare them.

In our topic modeling example, at the start we likely have no idea how many topics will lead to an interpretable, sensible set of topics. We can make quick work of this using the pattern rule we wrote to train our models. Here it is again:

models/%_topics.png: src/models/fit_lda.py data/processed/NIPS_1987-2015.csv src/models/prodlda.py

$(PYTHON_INTERPRETER) $< $(word 2, $^) $@ --topics $*

A pattern rule makes it easy to declare rules to build many files using the same general execution pattern. In our case, we use the automatic variable $* to include the stem matched in the actual execution, and we use it as the number of topics in our topic model. Combine that with a phony target all, and we can train multiple models using a single make all run:

all: models/10_topics.png models/20_topics.png

Then we can compare the output of both.

Leverage utilities

Say you have a thousand text files, all with the same 20 lines of boilerplate that needs to be removed. Boring, right? Also easy with Make:

data/processed/%.txt: data/raw/%.txt

sed 1,20d $^ > $@

By Just like we relied on curl earlier, we can use whatever we want to process our data, and whoever reads our Makefile will know exactly how we created that processed data. Easy, reproducible, and transparent.

Ease of collaboration

There are, at least in my experience, as many answers to “how do we get our models to prod?” as there are organizations and architectures. Many (most?) organizations have years of data, and legacy constraints to go along with them. This can mean that as much as we’d like to be adopting the latest seamless MLOps framework, we can find ourselves collaborating with software or data engineering teams.

Often, these teams have been trained by experience that “Can you help the DS team deploy this?” often translates to, “Can you take this poorly documented notebook that documents none of the assumptions that went into the model build, and somehow turn it into a maintainable build process? Stand on the opposite side of that fence while I throw it over ktxbye!”

Make is no magic wand to wave away any of these challenges. But, a well-constructed model pipeline orchestrated in Make already expresses a process as a DAG. By explicitly specifying prerequisites, a Make rule shows precisely what must precede a given step. In this way, even if our model build must be rebuilt in some other environment, our Makefile gives a detailed specification of that that process.

“Sure, we can help,” our software or data engineering friends begin, stifling a shudder. “Can you walk us through your model training/build process?”

“Totally, if you clone our repo, navigate to project and run make all with the -B and --recon flag, it’ll show you how all the steps are executed. I’ll shoot a link to the repo hold on…”

When they run that command—the -B flag tells Make to just remake all targets, even if they aren’t out of date—they’ll see everything we have to do to build our models:

curl -o data/raw/NIPS_1987-2015.csv https://archive.ics.uci.edu/ml/machine-learning-databases/00371/NIPS_1987-2015.csv

python3 src/data/transpose.py data/raw/NIPS_1987-2015.csv data/processed/NIPS_1987-2015.csv

python3 src/models/fit_lda.py data/processed/NIPS_1987-2015.csv models/10_topics.png --topics 10

python3 src/models/fit_lda.py data/processed/NIPS_1987-2015.csv models/20_topics.png --topics 20

Why do you hate notebooks, you monster?

I emphatically don’t, I swear. They’re incredibly useful as communication tools—I’d rather write, or read, a sleek notebook over a slide deck any day—and for interactive, DS thought jotting. “Oh when i plot this we see some collinearity…let’s calculate and print R-squared…” is the kind of thing I mumble to myself many mornings. I love all notebooks, both IPython and physical ones filled with pretentiously, needlessly high-quality paper. (I was an English major.)

I imagine Jeanette Winterson filled dozens of physical notebooks while she was writing The Passion. Nevertheless, at the end of that process she instead published a novel, concise and unified and beautiful and enchanting. You, my dear data scientist, are likewise an artist of ambition and vision, and we do ourselves a disservice when we confuse our final medium with the sketched out thoughts that get us there.

And tortured metaphors aside, notebooks are a version control nightmare.

Wrapping up

We’ve gone over how Make can make your life easy, by breaking up your workflow into modular chunks, and making the orchestrated run through all of them and easily reproducible process. But we’ve just scratched the surface of make and its capability of. Here are a few handy resources on taking these skills even further.

- The full example project shows how individual scripts were written to complete each of the steps discussed above.

- It’s built from this cookie cutter, a fabulously opinionated way to set up projects based on fabulous, well-reasoned opinions. It’s well worth a thorough read, especially for its succinct argument of how simple conventions can level up your modeling productivity and reproducibility.

- This great book can help folks dive into the full power of GNU Make, which we’ve only waded into here. Its primary focus is on traditional software projects, but this is no barrier: It thoroughly presents Make’s capabilities and gives a thorough, guided introduction. If you’re looking to spare yourself the gotchas that come with trying to learn new tech solely from the docs, this could be a good buy.